A digital installation across many mobile devices

On and off over the past few months, I have been hatching and nurturing an idea: using mobile devices to create a joined-up experience across several screens, something that connects people and their devices in one place, as a digital art project.

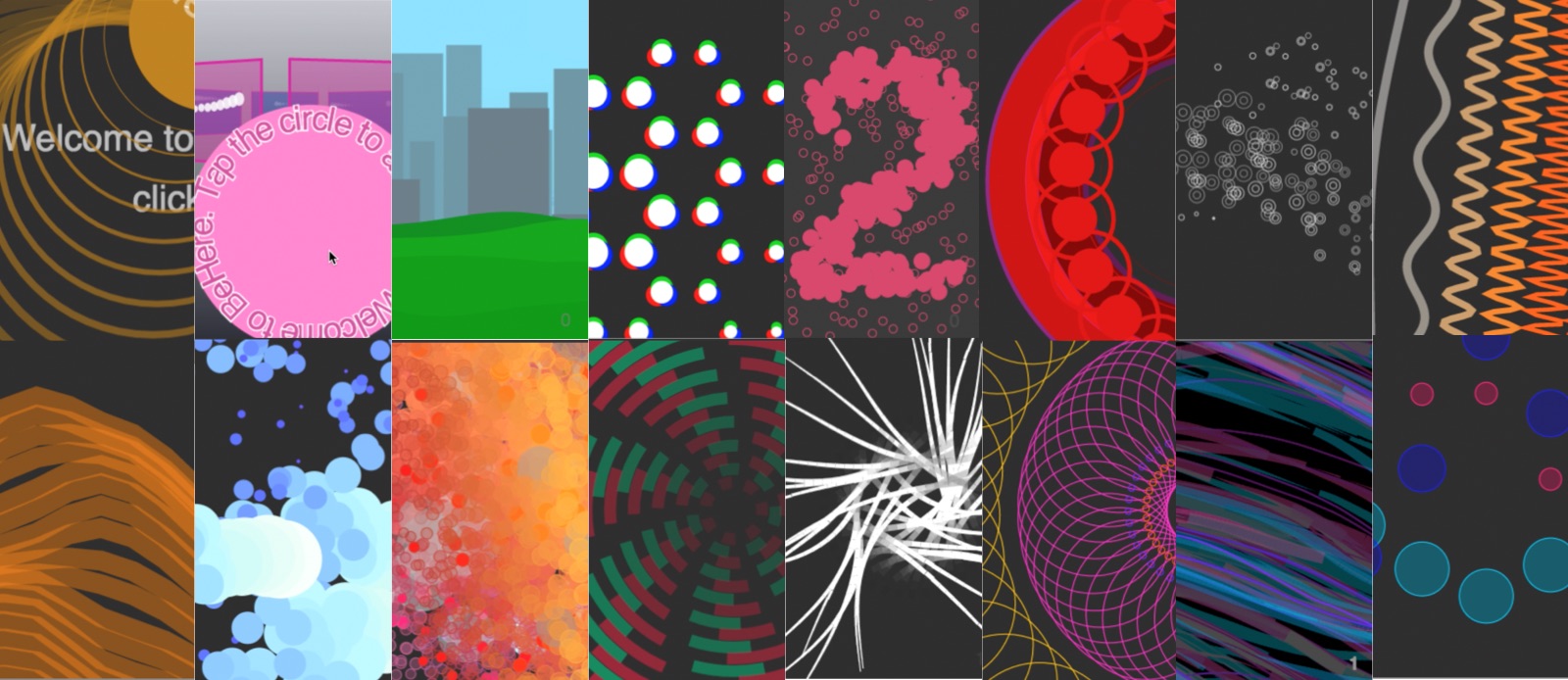

Since discovering Processing, p5.js and Dan Shiffman’s Coding Train channel, I have experimented with many discrete generative and algorithmic creative coding ideas. What I lacked was a way to bring any of them together into a narrative and an engaging experience for an audience. BeHere starts from the idea that these generative visuals can extend across multiple screens. Effects can flow from one screen to the next, and can even loop around continuously.

Several concepts and technical components are needed to make this work.

1. Algorithmic visualisations

I already have many examples of a full-screen canvas in a mobile web browser running animated, algorithmically generated visual effects with the p5.js library. Within BeHere these need to be encapsulated as “themes”, which can be loaded and then influenced by parameters and by the flow of particles (discussed below). I will not discuss the individual themes, or the techniques they use, here.

2. Backend for connection, coordination, messaging

The individual devices each download and execute a copy of the same JavaScript code. To connect them, though, they need a common platform that knows they are connected, can send and receive messages, and coordinates triggers and flows between the devices. Node.js and Socket.io provide the mechanics for this. On top of Node I have created a connection manager and a leave/join mechanism, so that we know which devices are in the ring and their relative positions. Position matters because we want an effect to flow (for example) off the right-hand side of one screen and appear on the left-hand side of the next. We cannot sense the relative positions of physical devices, so we must tell joining users which position to take. From an art/experience point of view there is of course no reason we cannot play with the ordering, but for now let’s assume we want to maintain the logical order of devices.
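The ring bookkeeping described above can be sketched as a small, framework-free class. This is a minimal sketch under assumptions, not the actual BeHere connection manager. The class name `Ring`, its methods, and the use of string device ids (for example Socket.io `socket.id` values) are all hypothetical. Devices are kept in physical left-to-right order, and the ring wraps so that flows can loop.

```javascript
// Hypothetical ring bookkeeping: device ids held in left-to-right order.
// The ring wraps, so the device after the last one is the first one.
class Ring {
  constructor() {
    this.order = []; // device ids in physical left-to-right order
  }

  // Add a device at the right-hand end of the ring; returns its position.
  join(id) {
    if (!this.order.includes(id)) this.order.push(id);
    return this.order.indexOf(id);
  }

  // Remove a device; its two neighbours become adjacent to each other.
  leave(id) {
    this.order = this.order.filter((d) => d !== id);
  }

  position(id) {
    return this.order.indexOf(id);
  }

  // Device to the right: receives particles leaving this device's right edge.
  rightOf(id) {
    const i = this.order.indexOf(id);
    if (i === -1) return undefined;
    return this.order[(i + 1) % this.order.length];
  }

  // Device to the left: receives particles leaving this device's left edge.
  leftOf(id) {
    const i = this.order.indexOf(id);
    if (i === -1) return undefined;
    return this.order[(i - 1 + this.order.length) % this.order.length];
  }
}
```

Suppose devices `a`, `b` and `c` join in that order. `rightOf("c")` then wraps round to `"a"`. After `leave("b")`, `rightOf("a")` is `"c"`.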

3. Triggering visual themes

We have devices, each with a visual capability, connected in some order. The p5 visual sketches are created as themes, with one theme active at any one time. All devices load the code for all themes when they connect. The Node server triggers the start of a theme by sending a message to the devices. That message includes parameters that influence the visuals to a greater or lesser extent. The parameters can be homogenised across devices, or the visuals can be left to vary independently in response to the device, random influences, or central parameters.
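One way to express the choice between homogenised and independent visuals is through the parameters themselves. Each parameter in the server’s theme message is either a fixed value, which every device uses as-is, or a range, from which each device draws its own value. This message format is hypothetical: the `theme` and `params` fields, the `{ min, max }` ranges and the theme name are assumptions for illustration. The random source is injected so the behaviour can be tested.

```javascript
// Hypothetical client-side resolution of theme parameters sent by the server.
// A fixed value is homogenised: every device uses the same value.
// A { min, max } range lets each device pick its own value independently.
function resolveParams(message, random = Math.random) {
  const resolved = {};
  for (const [name, spec] of Object.entries(message.params || {})) {
    if (spec !== null && typeof spec === "object" && "min" in spec && "max" in spec) {
      resolved[name] = spec.min + random() * (spec.max - spec.min);
    } else {
      resolved[name] = spec; // fixed value shared by all devices
    }
  }
  return { theme: message.theme, params: resolved };
}

// Example message as the server might broadcast it to every device.
const message = {
  theme: "waves", // hypothetical theme name
  params: {
    hue: 200, // the same on every screen
    speed: { min: 0.5, max: 1.5 }, // varies from device to device
  },
};
```

On the server, broadcasting such a message to every connected device is a single Socket.io call, `io.emit("theme", message)`. The event name `"theme"` is an assumption. Each client would then call something like `resolveParams` in its `socket.on("theme", ...)` handler before starting the theme.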

4. Particle flow, real-time messaging

So we now have a set of connected devices, each rendering its own visuals independently from a common code base, with changes of visual theme triggered by the server. At this point there is no flow or messaging between devices. This last part comes from the idea of particles flowing across the space of each device, and between devices. Particles can be visible and built directly into the visualisation algorithm. They can also be hidden and subtly influence elements of the visuals, or even be ignored altogether. When a particle reaches a left or right boundary, the device advertises its position to the server. The server passes it on to the neighbouring device in that direction. That device picks up the position and moves the particle according to its own algorithm until it again exits the visible space. This simulates flow between devices, as though each screen were a window onto one continuous, flowing spectacle.
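The handoff at a screen edge can be sketched as two pure functions. The first detects a particle leaving the canvas and turns it into a small message. The second places an incoming particle on the opposite edge of the receiving screen. This is a hypothetical sketch, and the message fields are assumptions. The vertical position is sent as a fraction of screen height so that screens of different sizes line up. Only the position is passed on, because the receiving device moves the particle by its own algorithm.

```javascript
// Hypothetical edge handoff between neighbouring screens.
// Returns a message when a particle has crossed the left or right edge,
// or null while it is still on screen.
function exitMessage(particle, width, height) {
  if (particle.x > width) {
    return { direction: "right", yFraction: particle.y / height };
  }
  if (particle.x < 0) {
    return { direction: "left", yFraction: particle.y / height };
  }
  return null;
}

// On the receiving device, a particle that left its neighbour's right edge
// enters at the left edge, and vice versa. Its height is scaled to this
// screen; from here on, this device's own theme decides how it moves.
function enterParticle(message, width, height) {
  const x = message.direction === "right" ? 0 : width;
  return { x, y: message.yFraction * height };
}
```

In this sketch the server’s part is only routing. A `right` message goes to the device on the right in the ring, and a `left` message goes to the device on the left.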

5. Central coordination, local rendering

All of the algorithms and visual code run on the devices, including the position and movement of particles. The server handles the more mundane mechanics: managing connections, passing particle data, and managing the timing, order and parameters of theme changes (collectively called the narrative). As a result, particles are likely to move and render more slowly on an older device than on a newer one, and that affects the flow across the entire ring. However, slower rendering on one device does not affect rendering on any other.

In the current implementation, the narrative is predetermined (it need not be), but the rendering will be different on each device every time it is executed, due to the parameters, the flow of particles, and the use of random/noise elements.

There are no limits on the number of themes that can be included. Nor are there limits on the richness or variety of elements and parameters in a given theme, the relationship between moving particles and the current theme, or the order and parameters driving theme changes. This gives me a pretty rich platform to experiment with. For the current implementation of BeHere, I have used themes and a narrative that take the assembled viewers on a journey together: they are physically in one place to take part, but together they are taken somewhere else.

6. Infrastructure

To get the whole installation out of the laptop lab and into the world, I needed several pieces. The first was a wireless network, with local DHCP and local DNS so that I could use local, non-internet URLs. The second was a server capable of running Node.js and the libraries and packages I needed. The third was a regular web server to accept and redirect initial web connections on port 80. This took the form of a Raspberry Pi Zero plus a small, cheap WiFi router. The Pi does most of the work, including DNS, DHCP, the Apache web server and the Node components. In spite of its tiny size (less than 70mm x 30mm uncased), it runs all the real-time code without any performance problems. Together with the router, the whole package is around 100mm x 100mm x 40mm plus cables, and needs just two Micro USB cables for power.

Visitor experience

A visitor is initially taken to a landing page that is not part of the application. This lets the first page be served as a regular HTTP page on port 80. When visitors click through from this page, they load the web application proper, which talks to the Node server and establishes a socket connection. They are invited to attach to the ring and given some simple instructions. When they request to attach, they are allocated a position. Their own screen and their neighbours’ screens then light up to show physically where they should join, which maintains the natural order so that the “flow” works correctly. From then on, all screen content is governed by the current theme, and they are part of the BeHere experience.

Technical notes

Joining the ring

Originally I probably over-engineered the connection manager and the leave/join process. A new visitor requested to join, other visitors could permit the new joiner, and the joiner then accepted one of the various offers they received. Now the server answers the join request itself. The user is simply inserted into the ring, and their position is indicated by illuminating the screens of their new neighbours.
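The simplified, server-answered join can be sketched as one pure function. It inserts the joiner and reports which existing devices should light up. This is a hypothetical sketch: the function name, the return shape and the policy of placing each joiner at the end of the ring are all assumptions. The end of the ring sits between the current last and first devices.

```javascript
// Hypothetical server-side join handling: the joiner is placed at the end of
// the ring, between the current last and first devices, and the server
// reports which neighbours should light up to show where the visitor goes.
function handleJoin(order, id) {
  const next = [...order, id]; // new ring order, left to right
  const position = next.length - 1;
  if (next.length === 1) {
    return { order: next, position, illuminate: [] }; // alone: no neighbours
  }
  const left = next[position - 1];
  const right = next[0]; // the ring wraps round to the first device
  // With exactly two devices, left and right are the same device.
  const illuminate = left === right ? [left] : [left, right];
  return { order: next, position, illuminate };
}
```

The server would then message the joiner and each id in `illuminate` so that all of those screens light up together. With Socket.io this could be `io.to(id).emit(...)`, since every socket automatically joins a room named after its own id.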

Themes

Each theme is a p5 sketch with various algorithmic visual effects. These may (or may not) use server-provided parameters and the positions and number of particles currently in the vicinity. Themes continue to run until the server instructs them to switch. In a couple of instances, a theme has its own natural end and asks the server to trigger the next theme.
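The theme lifecycle described above can be sketched on the client as a small manager. All themes are loaded up front, one is active at a time, and the active theme can optionally signal a natural end. This is a hypothetical structure, not BeHere’s code. The `ThemeManager` class, the `start`/`draw`/`finished` methods on a theme and the `requestNext` callback are assumptions. `requestNext` would typically send a socket message to the server.

```javascript
// Hypothetical client-side theme manager. Every theme is loaded up front;
// only one is active at a time, and it runs until the server says otherwise.
class ThemeManager {
  constructor(themes, requestNext) {
    this.themes = themes; // { name: { start(params), draw(), finished?() } }
    this.requestNext = requestNext; // e.g. emits a message to the server
    this.active = null;
    this.requested = false;
  }

  // Called when the server instructs a switch.
  switchTo(name, params) {
    this.active = this.themes[name];
    this.requested = false;
    this.active.start(params);
  }

  // Called once per frame, e.g. from p5's draw().
  draw() {
    if (!this.active) return;
    this.active.draw();
    // A theme with a natural end asks the server to move on, only once.
    if (!this.requested && this.active.finished && this.active.finished()) {
      this.requested = true;
      this.requestNext();
    }
  }
}
```

The server still decides when to switch. A theme that has finished keeps drawing until the server’s switch message arrives.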

Narrative

The narrative is a JSON file, loaded by the server at startup, that identifies the themes, their duration, and any parameters that should be passed to the clients.
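A narrative file of this kind, and a startup check on it, might look like the following. The field names (`themes`, `name`, `duration`, `params`), the theme names and the use of seconds for `duration` are hypothetical assumptions; the real file format is not shown here. The JSON is inlined as a string so the example is self-contained.

```javascript
// Hypothetical narrative file contents; field names and values are assumptions.
// Durations are assumed to be in seconds.
const narrativeJson = `{
  "themes": [
    { "name": "stars",  "duration": 120, "params": { "particles": 50 } },
    { "name": "waves",  "duration": 90,  "params": { "hue": 200 } },
    { "name": "embers", "duration": 60,  "params": {} }
  ]
}`;

// Parse and sanity-check the narrative once, at server startup.
// In Node the text would typically come from fs.readFileSync(path, "utf8").
function loadNarrative(text) {
  const narrative = JSON.parse(text);
  if (!Array.isArray(narrative.themes) || narrative.themes.length === 0) {
    throw new Error("narrative must list at least one theme");
  }
  for (const step of narrative.themes) {
    if (typeof step.name !== "string" || !(step.duration > 0)) {
      throw new Error(`invalid narrative step: ${JSON.stringify(step)}`);
    }
    step.params = step.params || {}; // parameters are optional
  }
  return narrative;
}
```

Validating once at startup means a typo in the file stops the server immediately, rather than surfacing mid-performance when that theme is reached.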

When a client connects, it is instructed to load the current theme. From then on, it is triggered to load the next theme whenever the server determines that all devices need to shift. Some themes are more sensitive to the number of particles in play, so one of the parameters tells the devices either to reduce the number of particles or to seed new ones. As mentioned before, the paths of the particles are governed by the device algorithms.
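On a single device, a particle-count parameter could be applied roughly as follows. This is a hypothetical sketch. It treats the parameter as a target count, and it assumes the particle array is in arrival order, so trimming drops the oldest particles first. New particles are seeded at random positions on the screen. The injectable `random` argument exists only so the behaviour can be tested.

```javascript
// Hypothetical handling of a particle-count parameter on one device.
// Too many particles: keep only the newest `target` of them.
// Too few: seed new ones at random positions on this screen.
function adjustParticles(particles, target, width, height, random = Math.random) {
  if (particles.length > target) {
    return particles.slice(particles.length - target); // drop the oldest
  }
  const seeded = [...particles]; // copy, so the caller's array is untouched
  while (seeded.length < target) {
    seeded.push({ x: random() * width, y: random() * height });
  }
  return seeded;
}
```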

When the last theme in the narrative is reached, the server loops back to the start. The narrative runs regardless of whether any client devices are connected.
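A looping narrative clock can be sketched as a pure function of elapsed time. This function is hypothetical, as are the theme names and durations (assumed to be in seconds). Because elapsed time is taken modulo the total narrative length, the clock keeps running whether or not anyone is connected. A newly connected client can be told the current theme straight away.

```javascript
// Hypothetical narrative clock: given the time since the server started,
// work out which theme should be active. Time is taken modulo the total
// narrative length, so after the last theme it loops back to the first.
function currentStep(themes, elapsedSeconds) {
  const total = themes.reduce((sum, t) => sum + t.duration, 0);
  let t = elapsedSeconds % total;
  for (let i = 0; i < themes.length; i++) {
    if (t < themes[i].duration) {
      return { index: i, name: themes[i].name, remaining: themes[i].duration - t };
    }
    t -= themes[i].duration;
  }
  // Not reached for positive durations; kept as a guard.
  return { index: 0, name: themes[0].name, remaining: themes[0].duration };
}

const themes = [
  { name: "stars", duration: 120 },
  { name: "waves", duration: 90 },
  { name: "embers", duration: 60 },
];
```

With these durations, `currentStep(themes, 150)` is `waves` with 60 seconds remaining, and at 270 seconds the narrative is back at `stars`. This sketch does not model a theme ending early and asking for the next one; that would require the server to advance its clock.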

Performance

Server. The server’s main job is to manage connections, pass messages through sockets, and manage the timing and triggering of themes. None of these is especially demanding. When there are many particles in play, socket traffic can peak, especially as particles leave one device and enter another. Even then, the load on the CPU and network is very low, so a Raspberry Pi has easily handled the server side.

On the client side there are more variables. By its very nature, algorithmic art tends towards many features animated many times per second. A complex algorithm often means substantial calculations and multiple loops per instance per frame. This can create very rich and pleasing visuals, but it can force the browser to render fewer frames per second, which diminishes the experience. Because we want the BeHere experience to be as inclusive as possible, I have avoided assuming that devices are high-performance. The following measures have helped reduce the processing and rendering burden.

- for themes with complex components (shapes made of many vertices for example) simply reduce the number of vertices (possibly with reduced “smoothness”)

- reduce the target frame rate to 30 fps (instead of the standard 60).

- reduce the pixel density to 1. Very high-density displays lose some of their advantage. However, for themes that use pixel arrays, the number of pixels to process scales with the square of the density, so this reduces processing by a factor of 4 on a density-2 display.

- avoid the use of collision detection between elements

- maximise the effect per element, rather than having more elements in play

- where particles are particularly material in the algorithm, try to control the number of particles in play on any one screen.
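In p5.js, the frame-rate and pixel-density measures above are single calls in `setup()`: `frameRate(30)` and `pixelDensity(1)`. The arithmetic behind them can be checked with a few lines of plain JavaScript. p5’s `pixels` array holds four values (red, green, blue, alpha) per physical pixel, so its length is 4 × (width × density) × (height × density). The canvas size below is a hypothetical phone screen, chosen only for illustration.

```javascript
// Length of an RGBA pixel array for a canvas of the given CSS size and
// pixel density: four values per physical pixel.
function pixelArrayLength(width, height, density) {
  return 4 * (width * density) * (height * density);
}

// A hypothetical full-screen phone canvas, 390 x 844 CSS pixels.
const atDensity1 = pixelArrayLength(390, 844, 1);
const atDensity2 = pixelArrayLength(390, 844, 2);
const saving = atDensity2 / atDensity1; // work scales with density squared

// Time available per frame, in milliseconds, at each target frame rate.
const budgetAt60 = 1000 / 60; // about 16.7 ms
const budgetAt30 = 1000 / 30; // about 33.3 ms, twice as long per frame
```

The same arithmetic shows that on a density-3 display, dropping to density 1 cuts pixel work by a factor of 9 rather than 4.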

I am not a massively experienced coder and there will be inefficiencies in my code that could be improved upon.
