I’ve always been amazed at the artistic creations put on for festivals: more or less disposable pieces that exist only for the 3-4 days of the festival, yet may have taken weeks to design and build. They are always worth it.

Last year I attended a friend’s mini private festival and wondered whether I could bring my nascent coding skills to bear on some sort of technology-driven, interactive *art* installation. (I italicise *art* because it is more a visual confection than a true work of art.) The question was: could I do enough in the limited time available to create something in code, and manage the infrastructure for it to work on site?

The answer is: there is only one way to find out. At the start of May I set out to create a two-part installation involving a laptop, a projector and a couple of ways for the audience to interact. I decided to use Processing and its JavaScript cousin, p5.js, as I am reasonably familiar with both and they lend themselves to nice visualisations.

The plan was to spend May on project 1, WePilgrims: a set of visualisations created by the audience’s interactions on their own devices. By joining a Wi-Fi LAN and loading a locally hosted web page, they would be able to control various aspects of a jointly generated set of visual elements.

June is reserved for a visualisation based on images and movement captured by a Kinect (the Xbox 360 variety). This one I call SeePilgrims.

July, leading up to the festival, is for sorting out the infrastructure and trying the whole setup at scale, including load testing. Inevitably, all of the code will need a good tweak to work outside the lab. I am not, after all, a software engineer.

For WePilgrims, I am using a Node.js server on the Mac to serve web pages to the individual clients and to a separate display page, which is what the projector shows as the visualisation of what happens.

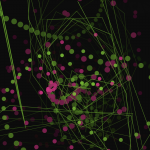

Each client device loads a web page with a simple interface: an area you can tap or trace a finger across, and a few option buttons to change colours and effects. These interactions are sent to the Node server over socket.io connections as JSON objects, and Node forwards them immediately to the display without altering them. The Node server is really just there to establish and maintain connections and to handle disconnections.
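To make the relay idea concrete, here is a minimal sketch of the forwarding logic in plain TypeScript. It deliberately leaves socket.io out so it can run on its own: `RelayHub`, `connect`, `receive` and the `Send` callback are hypothetical names standing in for real sockets, and tagging each connection as a `client` or `display` is an assumption about how the two kinds of page are told apart.

```typescript
// Library-free model of the WePilgrims relay. All names are hypothetical;
// a Send callback stands in for a real socket's emit.
type Role = "client" | "display";
type Send = (payload: string) => void;

interface Connection {
  role: Role;
  send: Send;
}

class RelayHub {
  private connections = new Map<string, Connection>();

  // Register a phone ("client") or the projector page ("display").
  connect(id: string, role: Role, send: Send): void {
    this.connections.set(id, { role, send });
  }

  // Forget a connection, e.g. when a phone drops off the Wi-Fi.
  disconnect(id: string): void {
    this.connections.delete(id);
  }

  // Forward a client's JSON string, unmodified, to every display.
  // Returns the number of displays it reached.
  receive(fromId: string, payload: string): number {
    const sender = this.connections.get(fromId);
    if (sender === undefined || sender.role !== "client") {
      return 0; // ignore unknown senders and anything a display sends
    }
    let delivered = 0;
    this.connections.forEach((conn) => {
      if (conn.role === "display") {
        conn.send(payload);
        delivered++;
      }
    });
    return delivered;
  }

  // How many phones are currently connected.
  clientCount(): number {
    let n = 0;
    this.connections.forEach((conn) => {
      if (conn.role === "client") n++;
    });
    return n;
  }
}

// Usage: one projector page and two phones, one of which leaves.
const received: string[] = [];
const hub = new RelayHub();
hub.connect("projector", "display", (p) => received.push(p));
hub.connect("phone-a", "client", () => {});
hub.connect("phone-b", "client", () => {});
hub.receive("phone-a", JSON.stringify({ type: "tap", x: 0.5, y: 0.25 }));
hub.disconnect("phone-b");
```

In socket.io itself, the same idea maps roughly onto having the display page's socket `join()` a room and forwarding each client event with `io.to(room).emit()`. The hub keeps no state beyond who is connected, which matches a server whose only job is managing connections.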

The socket.io stream to the display web page carries all the JSON objects from all the clients. Each client gives visual feedback that the user has touched or moved, but the user has to work out how that is realised in the display.
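Because every client’s messages arrive interleaved on one stream, the display has to cope with whatever turns up. A sketch of one way to do that, assuming a hypothetical message shape (`clientId`, `kind`, and touch coordinates `x`/`y` normalised to 0..1 on the phone): parse defensively, drop anything malformed, and only scale to canvas pixels at the last moment, so phones with different screen sizes all map onto the same projected area.

```typescript
// Hypothetical message shape; the field names are assumptions, not the project's format.
interface Interaction {
  clientId: string;
  kind: "tap" | "trace" | "option";
  x: number; // 0..1 across the phone's touch area
  y: number; // 0..1 down the phone's touch area
  option?: string; // e.g. which colour or effect button was pressed
}

// Parse one payload from the shared stream; return null for anything malformed.
function parseInteraction(raw: string): Interaction | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON
  }
  if (typeof data !== "object" || data === null) return null;
  const d = data as Record<string, unknown>;
  const clientId = d.clientId;
  const kind = d.kind;
  const x = d.x;
  const y = d.y;
  const option = d.option;
  if (typeof clientId !== "string" || typeof x !== "number" || typeof y !== "number") return null;
  if (kind !== "tap" && kind !== "trace" && kind !== "option") return null;
  // Clamp so a stray value from a phone cannot land off the canvas.
  const clamp = (v: number): number => Math.min(1, Math.max(0, v));
  return {
    clientId,
    kind: kind as Interaction["kind"],
    x: clamp(x),
    y: clamp(y),
    option: typeof option === "string" ? option : undefined,
  };
}

// Scale a normalised interaction to pixel coordinates on the projected canvas.
function toCanvas(i: Interaction, width: number, height: number): { px: number; py: number } {
  return { px: i.x * width, py: i.y * height };
}
```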

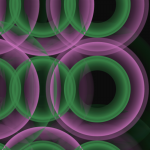

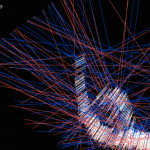

The display itself is a p5.js-rendered canvas. There are multiple themes, each of which interprets the user interactions in an entirely different way.

One user may trigger new visual items, while another may control existing items, or the overall display through effects and parameters. It is deliberately a little generative and non-deterministic.
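One way to structure themes so that each reads the same stream differently is a shared interface with one handler per theme. The sketch below is hypothetical throughout: `Theme`, `SpawnAndSteer`, `SharedRipple`, and the rule that newly seen clients alternate between spawning items and steering an effect are illustrative assumptions. Randomness is injected as a function so it can default to `Math.random` while a test pins it to a fixed value.

```typescript
// Hypothetical interaction shape (x, y normalised to 0..1).
interface Interaction {
  clientId: string;
  kind: "tap" | "trace" | "option";
  x: number;
  y: number;
}

interface Item { x: number; y: number; hue: number; size: number; }

// Every theme receives the same messages and decides what they mean.
interface Theme {
  name: string;
  handle(i: Interaction): void;
}

// Theme A (hypothetical): new clients alternate between spawners and controllers.
class SpawnAndSteer implements Theme {
  name = "spawn-and-steer";
  items: Item[] = [];
  spin = 0; // a global effect parameter, -1..1, driven by controllers
  private roles = new Map<string, "spawn" | "control">();
  private rand: () => number;

  constructor(rand: () => number = Math.random) {
    this.rand = rand;
  }

  private roleOf(id: string): "spawn" | "control" {
    let role = this.roles.get(id);
    if (role === undefined) {
      role = this.roles.size % 2 === 0 ? "spawn" : "control";
      this.roles.set(id, role);
    }
    return role;
  }

  handle(i: Interaction): void {
    if (this.roleOf(i.clientId) === "spawn") {
      if (i.kind === "tap") {
        // Random hue and size keep the output a little non-deterministic.
        this.items.push({ x: i.x, y: i.y, hue: this.rand() * 360, size: 10 + this.rand() * 40 });
      }
    } else if (i.kind === "trace") {
      this.spin = i.x * 2 - 1; // map 0..1 across the phone to -1..1
    }
  }
}

// Theme B (hypothetical): every touch nudges one shared ripple centre.
class SharedRipple implements Theme {
  name = "shared-ripple";
  cx = 0.5;
  cy = 0.5;

  handle(i: Interaction): void {
    // Ease the centre 10% of the way towards each touch.
    this.cx += (i.x - this.cx) * 0.1;
    this.cy += (i.y - this.cy) * 0.1;
  }
}
```

The display loop would then hand each parsed message to whichever theme is currently active, so switching themes changes what the same gestures do without touching the clients.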

Although May is over, work on this is not complete. I have around six themes, but these have yet to mature, and the overall controls and flow are not yet in place.

Now that it is June, I have started work on SeePilgrims. For this I am using classic Java-based Processing, as it has a well-documented library for the Kinect. Some of the previously available OpenNI API for the Kinect is now only partially available and documented, since PrimeSense, the company behind OpenNI, was acquired.

See Daniel Shiffman’s tutorials and documentation on the Kinect for Processing at http://shiffman.net/p5/kinect/.

Plenty here is not as fully supported as in the official APIs, such as skeleton detection, which you really need to make an on-screen avatar fully interactive. But you can access the depth map and create a 3D point cloud. From there, there are plenty of visualisations you can do that will be ample for my art installation. Again, my aim is to have a number of themes that the program cycles through, each turning the same inputs into a very different visual outcome.
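Turning the depth map into a point cloud is essentially a pinhole-camera back-projection: a pixel (u, v) with depth z maps to x = (u - cx) * z / fx and y = (v - cy) * z / fy. A sketch in TypeScript (the project itself uses Processing’s Java), assuming depth has already been converted to metres with 0 meaning "no reading". The focal lengths and principal point below are placeholder values of roughly the right size for a 640x480 Kinect v1 depth image, not calibrated figures, and `skip` is an assumed subsampling step to keep the point count manageable.

```typescript
interface Point3 { x: number; y: number; z: number; }

// Placeholder intrinsics (assumptions, not calibrated Kinect values).
const FX = 594; // focal length in pixels, x
const FY = 591; // focal length in pixels, y
const CX = 320; // principal point: centre of a 640x480 image
const CY = 240;

// depthMetres is row-major, width * height long; 0 means the sensor saw nothing.
function depthToPointCloud(
  depthMetres: ArrayLike<number>,
  width: number,
  height: number,
  skip = 4,
): Point3[] {
  const points: Point3[] = [];
  for (let v = 0; v < height; v += skip) {
    for (let u = 0; u < width; u += skip) {
      const z = depthMetres[v * width + u];
      if (!(z > 0)) continue; // skip holes in the depth map
      points.push({ x: ((u - CX) * z) / FX, y: ((v - CY) * z) / FY, z });
    }
  }
  return points;
}
```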

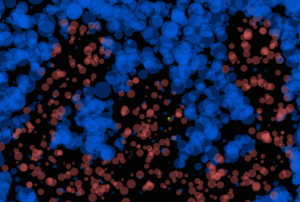

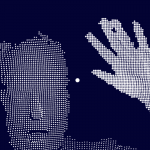

For example, in a couple of themes I flatten the point cloud so that I essentially have a moving 2D human outline, separated from the background. I then use this for some collision detection, rendering the human figure separately from the background.
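A sketch of the flattening step, assuming the figure can be isolated by keeping only pixels inside a depth band (`near`/`far`, hypothetical values in metres to tune on site) and discarding z. The resulting mask answers the collision question "is this point on the person?", and a pixel counts as outline when it is inside the mask but has a 4-neighbour outside it.

```typescript
interface Mask { width: number; height: number; cells: Uint8Array; }

// Flatten depth to a 2D mask: 1 where the depth falls inside the assumed figure band.
function figureMask(depthMetres: ArrayLike<number>, width: number, height: number, near = 0.5, far = 2.5): Mask {
  const cells = new Uint8Array(width * height);
  for (let i = 0; i < width * height; i++) {
    const z = depthMetres[i];
    cells[i] = z >= near && z <= far ? 1 : 0;
  }
  return { width, height, cells };
}

// Collision test: does a point (e.g. a particle at pixel coords x, y) overlap the figure?
function hitsFigure(mask: Mask, x: number, y: number): boolean {
  const u = Math.floor(x);
  const v = Math.floor(y);
  if (u < 0 || v < 0 || u >= mask.width || v >= mask.height) return false;
  return mask.cells[v * mask.width + u] === 1;
}

// Outline pixel: inside the figure, with at least one 4-neighbour outside it (or off the image).
function isOutline(mask: Mask, u: number, v: number): boolean {
  const inside = (a: number, b: number): boolean =>
    a >= 0 && b >= 0 && a < mask.width && b < mask.height && mask.cells[b * mask.width + a] === 1;
  if (!inside(u, v)) return false;
  return !inside(u - 1, v) || !inside(u + 1, v) || !inside(u, v - 1) || !inside(u, v + 1);
}
```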

I use the depth dimension of the point cloud to give me a half-tone value. This works a bit like electronic pin art.
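The pin-art effect can be sketched as: split the depth image into square cells, average the valid depth in each, and map nearer surfaces to bigger dots. The cell size and the `near`/`far` range are assumptions, the function name is hypothetical, and cells with no depth reading get no dot.

```typescript
// Returns one row of dot radii per grid row; the radius is at most half a cell.
function halftoneRadii(
  depth: ArrayLike<number>,
  width: number,
  height: number,
  cell: number,
  near: number,
  far: number,
): number[][] {
  const maxR = cell / 2;
  const rows: number[][] = [];
  for (let top = 0; top + cell <= height; top += cell) {
    const row: number[] = [];
    for (let left = 0; left + cell <= width; left += cell) {
      // Average only the pixels that actually have a depth reading.
      let sum = 0;
      let n = 0;
      for (let y = top; y < top + cell; y++) {
        for (let x = left; x < left + cell; x++) {
          const z = depth[y * width + x];
          if (z > 0) {
            sum += z;
            n++;
          }
        }
      }
      if (n === 0) {
        row.push(0);
        continue;
      }
      // Clamp into [near, far], then invert so closer surfaces give larger dots.
      const t = (Math.min(far, Math.max(near, sum / n)) - near) / (far - near);
      row.push(maxR * (1 - t));
    }
    rows.push(row);
  }
  return rows;
}
```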

In another, I take the point cloud and rotate it about two axes while rendering a few different effects. All the while, the points move in response to the audience’s actions.
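Reading the rotation as a turn about two axes, a sketch: yaw about the vertical (Y) axis, then pitch about the horizontal (X) axis, both around a pivot such as the middle of the cloud, so it spins in place rather than swinging around the camera. The function name and the yaw-then-pitch order are illustrative choices.

```typescript
interface Point3 { x: number; y: number; z: number; }

// Rotate every point about the Y axis (yaw), then the X axis (pitch), around a pivot.
function rotateCloud(points: Point3[], yaw: number, pitch: number, pivot: Point3): Point3[] {
  const cy = Math.cos(yaw);
  const sy = Math.sin(yaw);
  const cp = Math.cos(pitch);
  const sp = Math.sin(pitch);
  return points.map((p) => {
    // Move into pivot-centred coordinates.
    const x0 = p.x - pivot.x;
    const y0 = p.y - pivot.y;
    const z0 = p.z - pivot.z;
    // Yaw: rotate in the x-z plane.
    const x1 = x0 * cy + z0 * sy;
    const z1 = -x0 * sy + z0 * cy;
    // Pitch: rotate in the y-z plane.
    const y2 = y0 * cp - z1 * sp;
    const z2 = y0 * sp + z1 * cp;
    return { x: x1 + pivot.x, y: y2 + pivot.y, z: z2 + pivot.z };
  });
}
```

Advancing `yaw` and `pitch` by small, different amounts each frame gives a slow tumble, while the points themselves keep updating from the live depth data.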

Each theme has a number of parameters affecting how it renders. Because the audience cannot easily control anything (I don’t have the Kinect’s skeleton tracking, and there is no mouse or other control for the audience to use), I will vary these parameters over time to create different effects.
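Since nobody in the audience can drive the parameters, one simple approach is to let each one drift on its own slow cycle and to switch themes on a timer. A sketch under those assumptions; `drift`, `currentTheme`, the periods and the ranges are all hypothetical, and giving each parameter a different period keeps the combinations from repeating quickly. Processing and p5.js also provide `noise()` for smoother, less regular drift than a sine wave.

```typescript
// Sinusoidal drift between lo and hi, completing one cycle every periodSeconds.
function drift(tSeconds: number, lo: number, hi: number, periodSeconds: number, phase = 0): number {
  const s = Math.sin((2 * Math.PI * tSeconds) / periodSeconds + phase); // -1..1
  return lo + ((s + 1) / 2) * (hi - lo);
}

// Index of the theme on screen, advancing every secondsPerTheme and wrapping around.
function currentTheme(tSeconds: number, themeCount: number, secondsPerTheme: number): number {
  return Math.floor(tSeconds / secondsPerTheme) % themeCount;
}

// Example frame: two parameters on unrelated periods, six themes a minute each.
const t = 125; // seconds since start
const dotScale = drift(t, 0.5, 2.0, 37);
const hueShift = drift(t, 0, 360, 89, Math.PI / 3);
const themeIndex = currentTheme(t, 6, 60);
```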

I want to take some of the work from Shiffman’s book The Nature of Code and get it to work with the Kinect figure detection, but that is proving tricky and time-consuming. We shall see.
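The Nature of Code pattern of accumulating forces into acceleration each frame could meet the Kinect figure through a simple inside/outside test. A minimal sketch, assuming the figure check is supplied as a function (for example backed by a depth-band mask); `Mover`, `step`, the gravity and lift values are hypothetical, and y grows downwards as on screen.

```typescript
interface Vec { x: number; y: number; }

// Forces add into acceleration; update() integrates and then clears it.
class Mover {
  pos: Vec;
  vel: Vec = { x: 0, y: 0 };
  acc: Vec = { x: 0, y: 0 };

  constructor(x: number, y: number) {
    this.pos = { x, y };
  }

  applyForce(f: Vec): void {
    this.acc.x += f.x;
    this.acc.y += f.y;
  }

  update(): void {
    this.vel.x += this.acc.x;
    this.vel.y += this.acc.y;
    this.pos.x += this.vel.x;
    this.pos.y += this.vel.y;
    this.acc = { x: 0, y: 0 }; // forces are re-applied every frame
  }
}

const GRAVITY: Vec = { x: 0, y: 0.1 }; // assumed strength, pulls down the screen
const LIFT: Vec = { x: 0, y: -0.5 }; // assumed push upwards while touching the figure

// One frame: gravity always, plus lift while the mover overlaps the figure.
function step(m: Mover, insideFigure: (x: number, y: number) => boolean): void {
  m.applyForce(GRAVITY);
  if (insideFigure(m.pos.x, m.pos.y)) {
    m.applyForce(LIFT);
  }
  m.update();
}
```

In a draw loop, `step` would run once per mover per frame, so particles fall past the background but get pushed back up wherever they land on a person.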

The aim of all this is not for any particular visualisation to be stunning, but for the overall effect and experience to be fun and inspiring. It is more important that I ship something that works than something that is perfect or cute. In my three years of University of Dave I have not really shipped anything as a final product, so this has to be my first.

Fingers crossed.
